KAIST Develops Technology to Reduce LLM Infrastructure Costs

- Input: 2025-12-28 12:00:00
- Updated: 2025-12-28 12:00:00

[Financial News] Researchers at the Korea Advanced Institute of Science and Technology (KAIST) have developed a technology that makes AI services more affordable by reducing reliance on expensive data center Graphics Processing Units (GPUs) and drawing on cheaper GPUs located closer to users.

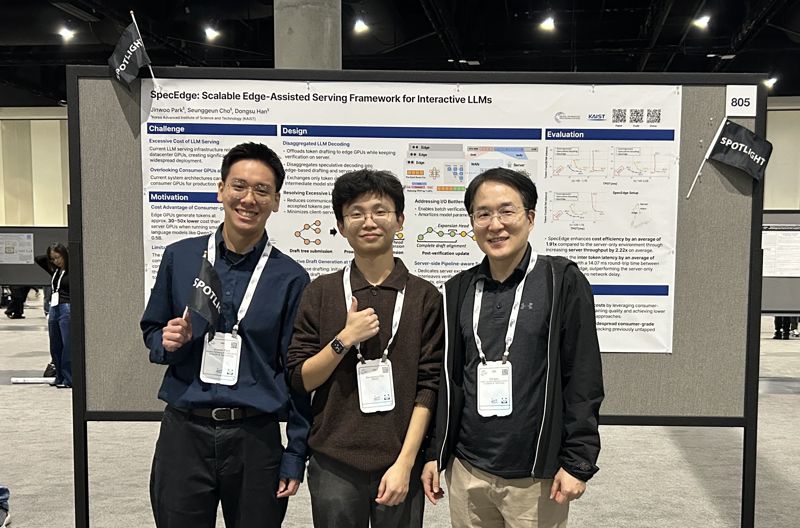

On the 28th, KAIST announced that Professor Han Dongsu's research team in the School of Electrical Engineering has developed a new technology called 'SpecEdge' that significantly lowers Large Language Model (LLM) infrastructure costs by leveraging widely distributed consumer-grade GPUs outside of data centers. Until now, most LLM-based AI services have depended on high-priced data center GPUs, which has driven up operating costs and raised the barrier to entry for AI technology.

The newly developed SpecEdge lets data center GPUs and 'Edge GPUs' installed in personal PCs or small servers divide the work of LLM inference between them. Applying the technology reduced the cost per token (the smallest unit of text an AI model generates) by approximately 67.6% compared with using data center GPUs alone.

The research team built on a technique called 'Speculative Decoding.' In this approach, a small language model running on an Edge GPU quickly drafts a sequence of likely tokens (words or word fragments, in order), and the large language model in the data center then verifies the drafted tokens together in a batch. Meanwhile, the Edge GPU keeps generating tokens without waiting for the server's response, which improves both LLM inference speed and infrastructure efficiency.
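The draft-then-verify loop described above can be illustrated with a minimal greedy version of speculative decoding. This is a toy sketch under stated assumptions, not SpecEdge's implementation: the two "models" are hypothetical integer functions standing in for the edge-side small model and the data-center large model, verification is emulated token by token rather than in one batched forward pass, and the pipelining that lets the Edge GPU keep drafting while the server verifies is left out.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def draft_tokens(draft: NextToken, prefix: List[int], k: int) -> List[int]:
    """Edge side: the small model proposes k tokens autoregressively."""
    proposal: List[int] = []
    context = list(prefix)
    for _ in range(k):
        tok = draft(context)
        proposal.append(tok)
        context.append(tok)
    return proposal


def verify(target: NextToken, prefix: List[int], proposal: List[int]) -> List[int]:
    """Server side: keep the longest prefix of the proposal the large model agrees with.

    On the first mismatch the large model's own token replaces the draft token;
    if every draft token matches, the large model adds one extra token.
    A real server scores all positions in one batched forward pass; this loop
    only reproduces the result of that pass.
    """
    accepted: List[int] = []
    context = list(prefix)
    for tok in proposal:
        expected = target(context)
        accepted.append(expected)
        if expected != tok:
            return accepted
        context.append(tok)
    accepted.append(target(context))
    return accepted


def speculative_decode(
    draft: NextToken, target: NextToken, prompt: List[int], max_new: int, k: int = 4
) -> List[int]:
    """Alternate drafting and verification until max_new tokens are produced."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        out.extend(verify(target, out, draft_tokens(draft, out, k)))
    return out[: len(prompt) + max_new]


# Hypothetical toy models: the "large" model counts modulo 10,
# and the "small" model agrees except that it guesses 0 after a 5.
def target(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10


def draft(ctx: List[int]) -> int:
    return 0 if ctx[-1] == 5 else (ctx[-1] + 1) % 10


if __name__ == "__main__":
    print(speculative_decode(draft, target, [0], 12))
    # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 0, 1, 2]
```

Because every emitted token is either confirmed or supplied by the large model, the output matches what the large model would produce on its own under greedy decoding. In a real system the saving comes from the large model checking several drafted tokens in one pass instead of generating them one at a time.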

Compared with running Speculative Decoding entirely on data center GPUs, this approach improved cost efficiency 1.91-fold and server throughput 2.22-fold. Notably, it operates reliably even at typical internet speeds, confirming that the technology can be applied to real-world services without the need for specialized network environments.
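The two headline cost figures are measured against different baselines, so they are not directly comparable. Assuming "cost efficiency" means tokens produced per unit of cost (an interpretation, not stated in the article), and writing $C$ for cost per token, each figure converts to the other form as follows:

$$
\begin{aligned}
\text{vs. data center GPUs only:}\quad & \frac{C_{\text{SpecEdge}}}{C_{\text{DC}}} = 1 - 0.676 = 0.324, && \frac{1}{0.324} \approx 3.09\times \text{ tokens per unit cost} \\
\text{vs. server-only speculative decoding:}\quad & \frac{C_{\text{SpecEdge}}}{C_{\text{SD}}} = \frac{1}{1.91} \approx 0.524, && 1 - 0.524 \approx 47.6\% \text{ lower cost per token}
\end{aligned}
$$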

The server is also designed to handle verification requests from many Edge GPUs efficiently, so it can process more requests at once without leaving its GPUs idle. This demonstrates an LLM serving infrastructure that makes more efficient use of data center resources.
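The article does not describe how the server schedules these requests. As a rough illustration only, the sketch below pools draft-verification requests from several edge clients into a queue and verifies up to a fixed number of them per step, one common way to keep a server GPU busy with large batches. `VerifyRequest`, `BatchingVerifier`, `max_batch`, and the toy counting model are all hypothetical names, and acceptance uses simple greedy matching.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Tuple

NextToken = Callable[[List[int]], int]


@dataclass
class VerifyRequest:
    client_id: str       # which edge device sent the draft (hypothetical field)
    prefix: List[int]    # tokens already committed for that client's sequence
    proposal: List[int]  # draft tokens waiting to be checked


def verify_one(target: NextToken, req: VerifyRequest) -> List[int]:
    """Greedy acceptance: keep matching draft tokens, then add one token from the large model."""
    accepted: List[int] = []
    context = list(req.prefix)
    for tok in req.proposal:
        expected = target(context)
        accepted.append(expected)
        if expected != tok:  # first disagreement: the large model's token wins
            return accepted
        context.append(tok)
    accepted.append(target(context))
    return accepted


class BatchingVerifier:
    """Pools drafts from many edge clients and verifies up to max_batch at a time."""

    def __init__(self, target: NextToken, max_batch: int = 8) -> None:
        self.target = target
        self.max_batch = max_batch
        self.queue: Deque[VerifyRequest] = deque()

    def submit(self, req: VerifyRequest) -> None:
        self.queue.append(req)

    def step(self) -> List[Tuple[str, List[int]]]:
        n = min(self.max_batch, len(self.queue))
        batch = [self.queue.popleft() for _ in range(n)]
        # A GPU server would run one padded forward pass over the whole batch here;
        # this sketch checks each request in turn to stay dependency-free.
        return [(req.client_id, verify_one(self.target, req)) for req in batch]


def target(ctx: List[int]) -> int:
    """Hypothetical stand-in for the data center model: counts modulo 10."""
    return (ctx[-1] + 1) % 10


if __name__ == "__main__":
    server = BatchingVerifier(target, max_batch=2)
    server.submit(VerifyRequest("edge-a", [1], [2, 3, 4]))
    server.submit(VerifyRequest("edge-b", [5], [6, 0]))
    server.submit(VerifyRequest("edge-c", [8], [9]))
    print(server.step())  # [('edge-a', [2, 3, 4, 5]), ('edge-b', [6, 7])]
    print(server.step())  # [('edge-c', [9, 0])]
```

Batching lets the server spread the cost of each forward pass across many edge clients. A production scheduler would also have to decide how long to wait for a batch to fill, which this sketch leaves out.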

If the technology is extended to other edge devices, such as smartphones, personal computers, and Neural Processing Units (NPUs), it is expected to bring high-quality AI services to a broader range of users.

Professor Han Dongsu stated, “Our goal is to utilize edge resources near users, beyond just data centers, as part of the LLM infrastructure. Through this, we aim to reduce the cost of providing AI services and create an environment where anyone can access high-quality AI.”

This research was presented as a Spotlight (top 3.2% of papers, acceptance rate 24.52%) at the Conference on Neural Information Processing Systems (NeurIPS), the world’s leading AI conference, held in San Diego from December 2 to 7.

Reporter Yeon Ji-an (jiany@fnnews.com)