Nvidia Accelerates 'NEXA AI' Local Agent Support on RTX PCs

- Published: 2025-11-13 09:14:54
- Updated: 2025-11-13 09:14:54

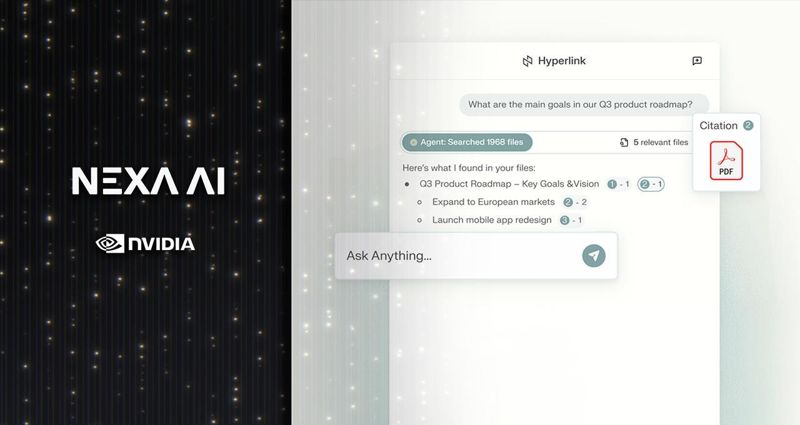

[Financial News] Nvidia announced on the 13th that it is officially supporting the local artificial intelligence (AI) agent Hyperlink on RTX AI PCs in collaboration with NEXA INTELLIGENCE.

AI assistants based on large language models (LLMs) are powerful productivity tools. However, without sufficient context, they can struggle to give accurate and relevant answers. Most LLM-based chat applications let users add context by uploading individual files, but they usually cannot reach the wider range of information stored on the user's PC, such as slides, notes, PDFs, or photos.

Hyperlink by NEXA INTELLIGENCE is a local AI agent designed to address these issues. Hyperlink quickly indexes thousands of files, understands the user's intent behind questions, and delivers tailored insights based on context.

The newly released version includes acceleration features for RTX AI PCs, tripling the speed of Retrieval-Augmented Generation (RAG) indexing. For example, a 1GB folder that previously took about 15 minutes to index can now be ready for search in just 4 to 5 minutes. In addition, LLM inference speed has doubled, further reducing response times to user queries.

Hyperlink leverages generative AI to accurately retrieve information from thousands of files, understanding not just keywords but also the intent and context of user queries.

To achieve this, it converts the local files a user designates, whether a single small folder or every file on the computer, into a searchable index. Users can simply describe what they are looking for in natural language, and Hyperlink will find relevant information across documents, slides, PDFs, images, and more.

For instance, if a user asks for material to write a report comparing the themes of two science fiction novels, Hyperlink can locate the relevant content even if the file has an uninformative name such as 'Literature_Assignment_Final.docx'.
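
The idea of searching by content rather than by file name can be illustrated with a toy example. The sketch below is not Hyperlink's method, which the announcement does not describe. It swaps in plain word-overlap scoring (cosine similarity over word counts) in place of the intent-aware retrieval the article refers to. The function names, the sample file contents, and the second file name are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each file name to a word-count vector of its contents."""
    return {name: Counter(tokenize(body)) for name, body in documents.items()}


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(index, query, top_k=3):
    """Return up to top_k file names whose contents overlap with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, vec), name) for name, vec in index.items()]
    scored = [(score, name) for score, name in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


# Hypothetical file contents; only the first file name comes from the article.
documents = {
    "Literature_Assignment_Final.docx": (
        "Both science fiction novels explore themes of isolation "
        "and artificial minds."
    ),
    "Budget_2025.xlsx": "Quarterly budget totals and travel expenses.",
}

index = build_index(documents)
results = search(index, "compare themes of two science fiction novels")
print(results)
```

Because the score depends only on the words inside each file, the query matches the literature assignment even though nothing in its file name mentions science fiction. A production system would typically replace the word counts with learned embeddings so that paraphrases and related concepts also match.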

By combining search capabilities with accelerated LLM inference on RTX, Hyperlink answers questions based on insights drawn from the user's files. It connects ideas from various sources, identifies relationships between documents, and generates logical responses with clear source citations.

All user data is stored only on the device and remains private. Nvidia emphasized that personal files are never transmitted outside the computer, ensuring sensitive information is not leaked to the cloud.

Reporter Jang Min-kwon (mkchang@fnnews.com)