Kakao Releases Two Open-Source Kanana Models: A Lightweight Multimodal Model and an MoE Model

- Posted: 2025-07-24 09:43:28

- Updated: 2025-07-24 09:43:28

[Financial News] Kakao announced on the 24th that it has released two models as open source: what it describes as the best-performing lightweight multimodal language model among Korean open models, and Korea's first mixture-of-experts (MoE) model.

Kakao published both models on the open-source community Hugging Face: ‘Kanana-1.5-v-3b’, a lightweight multimodal language model that can understand images and follow instructions, and ‘Kanana-1.5-15.7b-a3b’, an MoE (Mixture of Experts) language model.

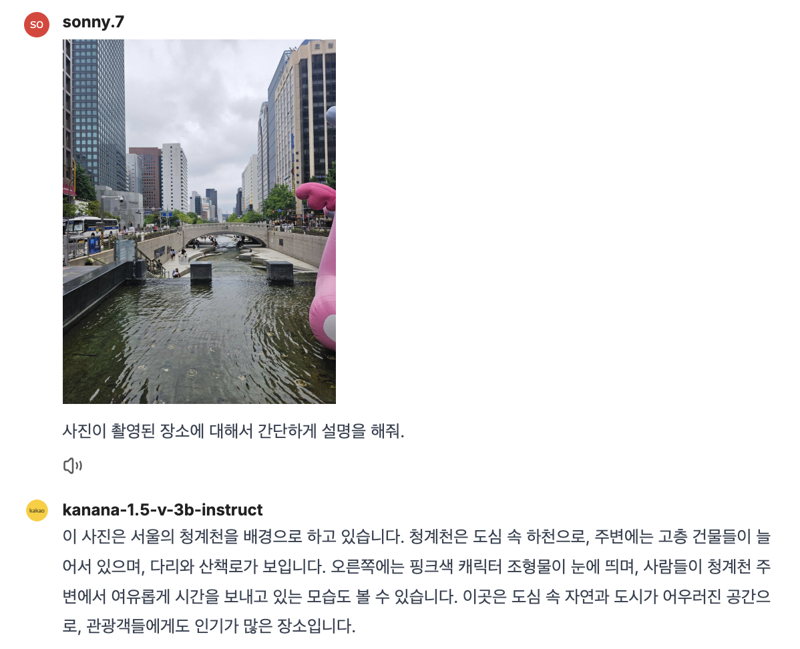

First, ‘Kanana-1.5-v-3b’ is a multimodal language model that processes images as well as text. It is built on Kanana 1.5, which Kakao released as open source at the end of May.

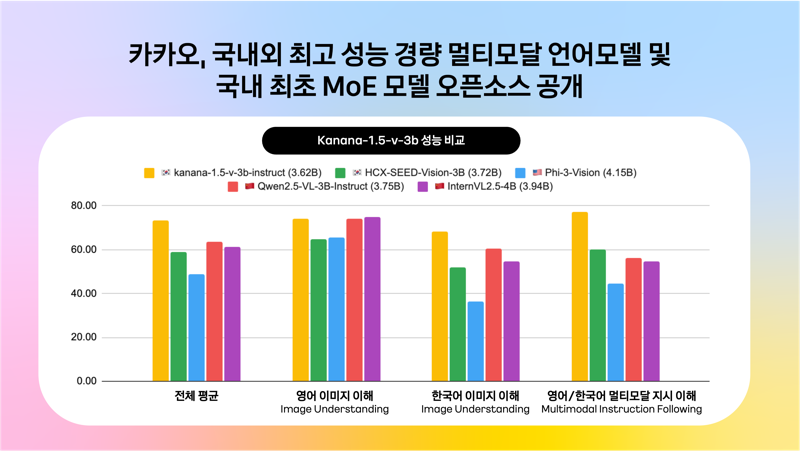

According to Kakao, ‘Kanana-1.5-v-3b’ follows instructions well, understands Korean and English images accurately, and correctly grasps the intent behind users' questions. Despite its small size, Kakao says its ability to read Korean and English documents presented as images is comparable to that of OpenAI's global multimodal model GPT-4o.

On Korean benchmarks, it scored highest among open models of similar size from Korea and abroad, and on various English benchmarks it performed on par with overseas open-source models. On an instruction-following benchmark, it scored 128% of the performance of similar-sized multimodal language models released in Korea.

The MoE model ‘Kanana-1.5-15.7b-a3b’ differs from conventional models, in which every parameter takes part in processing each input. Instead, it activates only a subset of expert sub-networks specialized for particular tasks, which makes more efficient use of computing resources and lowers costs.
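To make the routing idea concrete, here is a minimal Python sketch of generic top-k mixture-of-experts routing. It is not Kakao's implementation: the article does not say how many experts Kanana-1.5-15.7b-a3b has, how many it activates per token, or how its router works, so the class name `ToyMoELayer`, the dimensions, the expert count, and `top_k` below are all hypothetical. A small router scores each expert for the incoming token, only the `top_k` highest-scoring experts run, and their outputs are mixed with softmax weights; the unselected experts do no computation for that token.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class ToyMoELayer:
    """Hypothetical toy MoE layer with top-k routing (illustration only)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one scoring vector per expert.
        self.router = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(num_experts)]
        # In this toy, each "expert" is a single dim x dim linear map.
        scale = 1.0 / math.sqrt(dim)
        self.experts = [[[rng.gauss(0.0, scale) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]
        # Count how often each expert actually runs.
        self.calls = [0] * num_experts

    def forward(self, x):
        logits = matvec(self.router, x)
        # Keep only the top_k experts with the highest router scores.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i],
                        reverse=True)[:self.top_k]
        # Turn the chosen experts' scores into mixing weights that sum to 1.
        weights = softmax([logits[i] for i in chosen])
        out = [0.0] * len(x)
        for w, i in zip(weights, chosen):
            self.calls[i] += 1  # only selected experts do any computation
            y = matvec(self.experts[i], x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, chosen


if __name__ == "__main__":
    layer = ToyMoELayer(dim=8, num_experts=8, top_k=2, seed=42)
    token = [0.1 * i for i in range(8)]
    output, experts_used = layer.forward(token)
    print("experts used:", experts_used)
    print("expert call counts:", layer.calls)
```

With 8 experts and `top_k=2`, only a quarter of the expert weights are used for each token. Read the same way, the model name suggests that about 3B of roughly 15.7B parameters (around 19%) are active per token; in real MoE models the active count also includes always-on shared layers such as attention, so this is only a rough ratio.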

To reduce training time and cost, the model was built on Kakao's 3B-parameter model ‘Kanana-Nano-1.5-3B’. Although only 3B parameters are active at a time, it performed on par with or better than ‘Kanana-1.5-8B’.

Kakao says the MoE model can help companies and researchers who want to build high-performance AI infrastructure at low cost. Because it uses only a limited share of its parameters during inference, it is especially well suited to low-cost, high-efficiency services.

By open-sourcing the lightweight multimodal language model and the MoE model, Kakao aims to set a new standard for the AI model ecosystem and to give more researchers and developers a foundation for freely using efficient, powerful AI technology.

Kakao also plans to keep improving its models with in-house technology and, by scaling them up, to take on the development of very large models on par with global flagship models, contributing to the self-reliance and technological competitiveness of Korea's AI ecosystem.

Byunghak Kim, Kanana Performance Leader at Kakao, said, “This open-source release is a meaningful technical achievement in terms of both cost efficiency and performance. Going beyond simply advancing the model architecture, it meets two goals at once: application to services and technological independence.”

Reporter Joo Won-kyu (wongood@fnnews.com)