Naver Cloud Releases Reasoning Model Free for Commercial Use, Expanding the Domestic AI Ecosystem

- Published: 2025-07-22 10:00:29
- Updated: 2025-07-22 10:00:29

[Financial News] Naver Cloud announced on the 22nd that it has released 'HyperCLOVA X SEED 14B Think', a lightweight reasoning model developed from scratch with its own technology, as open source that is free for commercial use.

Because the model combines reasoning capability and lightweighting techniques built on Naver Cloud's own technology, rather than being a modified version of an overseas open-source model, it is expected to further strengthen domestic AI capabilities. Reasoning models are gaining attention as a core technology for AI agent services, and Naver Cloud has made this one available for business use, not only for research.

HyperCLOVA X SEED 14B Think is a lightweight version of the reasoning model HyperCLOVA X THINK, announced on June 30, designed so that it can be integrated into services stably and cost-effectively. Training costs were cut significantly by combining two techniques: pruning, which removes less important parameters while preserving as much of the original model's knowledge as possible, and distillation, which transfers to the smaller model the knowledge of the large model that was partly lost during pruning.
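For readers unfamiliar with the two techniques, the following is a minimal sketch of what pruning and distillation can look like, not Naver Cloud's actual method. The article does not say which pruning criterion or distillation loss was used, so the sketch assumes two common choices: magnitude pruning (dropping the weights with the smallest absolute values) and a KL-divergence loss between temperature-softened teacher and student outputs. The function names `magnitude_prune` and `distillation_loss` and all numbers are hypothetical.

```python
import math


def magnitude_prune(weights, keep_ratio):
    """Zero out the smallest-magnitude weights, keeping about keep_ratio of them.

    Assumption: "less important" is approximated by small absolute value.
    """
    k = max(1, int(len(weights) * keep_ratio))
    # Indices of the k weights with the largest absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions.

    Minimizing this pushes the small (student) model's outputs toward
    those of the large (teacher) model.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Toy example: prune half of six weights, then measure how far the
# student's outputs are from the teacher's.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
pruned = magnitude_prune(weights, keep_ratio=0.5)
print(pruned)

teacher = [2.0, 0.5, -1.0]
student = [1.5, 0.7, -0.8]
print(distillation_loss(teacher, student))
```

In this toy setup the three smallest weights are zeroed, and the loss is zero only when the student reproduces the teacher's output distribution exactly. A real training pipeline would apply the same idea to whole layers or attention heads and to full vocabulary distributions.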

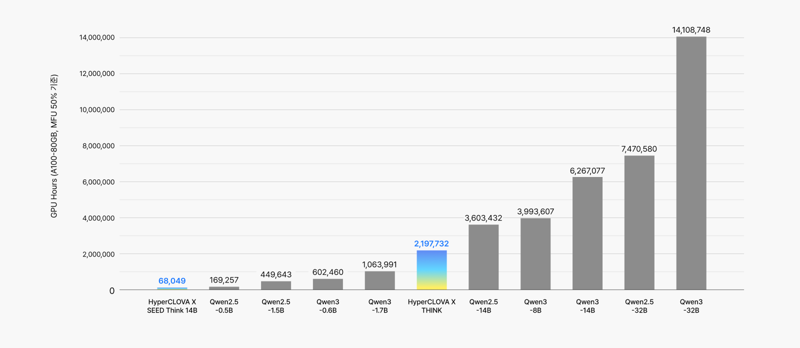

According to Naver Cloud, the model has 14 billion parameters yet was trained at a lower cost, measured in GPU hours, than a global open-source model with 500 million parameters. Compared with a global open-source model of the same size, the cost of a single training run is about one-hundredth.

Despite this low training cost, the model posted average scores similar to or higher than those of models of the same size (14 billion parameters) or larger (32 billion parameters) in evaluations covering Korean language, Korean culture, coding, and mathematics, showing once again that the company can build AI models that perform well relative to their cost and size.

Sung Nak-ho, Head of Hyper-scale AI at Naver Cloud, said, "By continuously upgrading our generative AI models with proprietary technology and designing efficient training strategies through numerous experiments and improvements, we were able to build a reasoning model that is more efficient in both cost and performance than others of the same scale," adding, "Rather than being absorbed into foreign ecosystems with technology modified from commercial models, we hope that HyperCLOVA X, built from the ground up with proprietary technology, will lead the full-fledged growth of the Korean AI ecosystem."

Meanwhile, the three lightweight HyperCLOVA X models released as open source this past April passed 1 million cumulative downloads in July, demonstrating their usability and popularity. More than 50 first-generation derivative models have in turn become the basis for further derivatives that are being built and shared, and Korean on-device AI services are launching, so the HyperCLOVA X open-source ecosystem is expanding rapidly.

yjjoe@fnnews.com Yoon-Joo Cho, Reporter